How Can Better Business Models be Created?

By Understanding the Technology

Business schools claim that it is not the technology but the business model that matters. Yet to understand the two most critical aspects of the business model, the value proposition and the customers, one must understand the technology. What does the technology offer that is better than the previous technology? Will the advantages translate into lower costs and/or a higher willingness to pay, and thus happy customers, profits for investors, and good pay for employees? And how do we translate these advantages into a value proposition, one that should evolve as the technology improves?

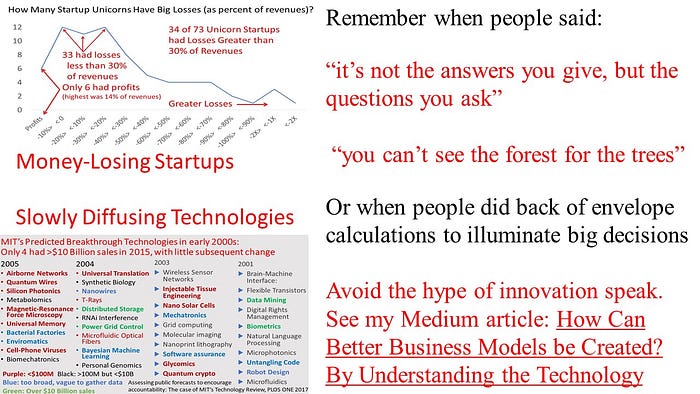

Advantages and value propositions are only relevant in a specific context, which can be called an application. Silicon Valley used to talk about killer applications: applications for which a new technology's advantages and value propositions are the largest. For some reason, the importance of finding these killer applications, and the customers who want them, has been forgotten, perhaps lost in the hype of venture capitalists and entrepreneurs that has resulted in huge startup losses[1].

Value propositions, killer apps, and customers are all connected, and thus a good business model presents a coherent story that ties them together with the technology. One cannot understand the former without the latter, and claiming that technology is not part of this coherence is foolish; it partly reflects an attempt by some to distract others from their lack of technological understanding.

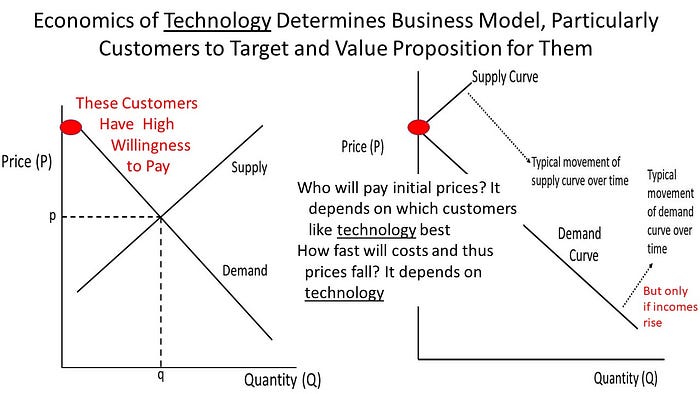

Central to this argument is the choice of which customers to target: those who will benefit more from the technology than other customers will. For every technology, the seller must have a good understanding of who will benefit the most from it, and thus who the first customers will be. If you said everyone, then you just failed. Almost every technology begins with a niche application that represents a small number of unusual customers. Personal computers began with do-it-yourself engineers in the late 1970s, and within five years word processing and spreadsheets had emerged as killer applications. E-commerce began with books and music, because of their huge variety, but quickly evolved into electronics and other products. The first hints of smartness in phones were text messages sent by young people, and young people became the first big users of many smart phone apps.

You are probably thinking that these technologies diffused to everyone, so who cares who the first customers were. The problem with that argument is that you would have gone out of business if you had not targeted the right customers initially. Thus, innovators must have a good idea of where customers sit on the demand curve: who has a high willingness to pay and who has a low willingness to pay? Without this understanding, failure is likely.
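To make "where customers sit on the demand curve" concrete, here is a minimal Python sketch that builds a demand curve from a list of customers' willingness to pay. The customer types and dollar figures are hypothetical assumptions chosen for illustration, not data; the only rule applied is that a customer buys when the price is at or below their willingness to pay.

```python
from typing import NamedTuple


class Customer(NamedTuple):
    name: str
    wtp: float  # willingness to pay in dollars (hypothetical values)


# Hypothetical early market for a new product, ordered from most to least eager.
CUSTOMERS = [
    Customer("hobbyist engineer", 2500.0),
    Customer("small-business accountant", 1800.0),
    Customer("writer", 1200.0),
    Customer("student", 600.0),
    Customer("retiree", 300.0),
]


def quantity_demanded(price: float, customers: list[Customer]) -> int:
    """Number of customers whose willingness to pay is at least `price`."""
    return sum(1 for c in customers if c.wtp >= price)


def demand_curve(customers: list[Customer]) -> list[tuple[float, int]]:
    """(price, quantity) points, one per distinct WTP, from the highest price down."""
    prices = sorted({c.wtp for c in customers}, reverse=True)
    return [(p, quantity_demanded(p, customers)) for p in prices]


def buyers_at(price: float, customers: list[Customer]) -> list[str]:
    """Names of the customers who would buy at `price`: the first targets."""
    return [c.name for c in customers if c.wtp >= price]


if __name__ == "__main__":
    for price, qty in demand_curve(CUSTOMERS):
        print(f"price ${price:>6,.0f} -> {qty} buyer(s)")
    print("Buyers at $1,500:", buyers_at(1500.0, CUSTOMERS))
```

Reading the curve from the top down lists the customers an innovator would target first; in this hypothetical market, a launch price of $1,500 reaches only the two customers with the highest willingness to pay.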

Performance also matters, and thus one could plot performance instead of price against demand. Some customers want high performance, and some are happy with low performance. Ideally, we would plot both performance and price against demand, but this is hard to show in a 2D image and thus is not shown here. This is a big reason why most economics classes only plot price against demand. Nevertheless, innovators should also have a good idea of which customers demand high performance and which are satisfied with low performance.
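What is hard to draw in 2D is easy to tabulate. The minimal Python sketch below counts demand for each combination of price and performance, assuming (hypothetically) that each customer has a maximum price and a minimum acceptable performance, and buys only when an offer satisfies both. The customers, the 0 to 100 performance index, and all numbers are illustrative assumptions.

```python
from typing import NamedTuple


class Customer(NamedTuple):
    name: str
    wtp: float              # maximum price the customer will pay, $ (hypothetical)
    min_performance: float  # lowest acceptable performance, 0-100 index (hypothetical)


CUSTOMERS = [
    Customer("power user", 3000.0, 90.0),
    Customer("professional", 2000.0, 70.0),
    Customer("casual user", 800.0, 40.0),
    Customer("budget buyer", 400.0, 20.0),
]


def demand(price: float, performance: float, customers: list[Customer]) -> int:
    """Customers for whom the offer is both affordable and good enough."""
    return sum(
        1 for c in customers
        if c.wtp >= price and performance >= c.min_performance
    )


def demand_table(prices, performances, customers):
    """Demand for every (price, performance) pair, keyed by that pair."""
    return {(p, q): demand(p, q, customers) for p in prices for q in performances}


if __name__ == "__main__":
    prices = [400.0, 800.0, 2000.0]
    performances = [30.0, 50.0, 95.0]
    table = demand_table(prices, performances, CUSTOMERS)
    print("price \\ perf " + "".join(f"{q:>6.0f}" for q in performances))
    for p in prices:
        print(f"{p:>12.0f} " + "".join(f"{table[(p, q)]:>6d}" for q in performances))
```

In this hypothetical market, a cheap low-performance offer reaches only the budget buyer, while a high-performance offer at $2,000 reaches the two most demanding customers, which is the kind of segmentation innovators need to understand.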

Assuming technologies will diffuse to everyone also hides the issue of how fast this will occur. Many people have bought into the idea that technologies diffuse faster now than in the past. They like to compare the diffusion of computers, the Internet, and smart phones with the diffusion of automobiles and electricity, while ignoring other recent technologies that have diffused slowly or not at all. These include solar cells, solar water heaters, wind turbines, cellulosic ethanol, electric vehicles, tablet computers, hydrogen vehicles, augmented reality, virtual reality, factory robots, commercial drones, blockchain, and hyperloop.

Some of these have been diffusing slowly because they do not provide a good value proposition (and may never diffuse), while others are diffusing slowly because their rates of improvement are very slow. Computers, the Internet, and smart phones experienced much more rapid improvements in cost and performance (30% to 40% per year) than did automobiles and electricity, and thus diffused faster. Gordon Moore liked to point out that if autos had improved as fast as microprocessors, they would now travel at millions of miles per hour and cost less than milk. Rates of improvement affect rates of diffusion and thus need to be considered. Theoretically speaking, they determine how fast the supply curve shifts to the right in a traditional supply and demand diagram, thus moving the price lower and the quantity higher.
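The arithmetic behind this argument is compound growth. The minimal Python sketch below compares the 30% and 40% annual rates from the text with a hypothetical 3% rate standing in for a slowly improving technology. Assuming that the cost of a fixed level of performance falls by a factor of (1 + rate) each year, it also estimates how long the price takes to fall below a given customer's willingness to pay. The 3% rate, the $10,000 starting cost, and the $1,000 willingness to pay are illustrative assumptions.

```python
import math


def improvement_factor(rate: float, years: float) -> float:
    """Cumulative cost-performance improvement after `years` at a constant annual `rate`."""
    return (1.0 + rate) ** years


def doubling_time(rate: float) -> float:
    """Years for cost-performance to double at a constant annual `rate` (0.35 = 35%/yr)."""
    return math.log(2.0) / math.log(1.0 + rate)


def years_until_affordable(cost: float, wtp: float, rate: float) -> float:
    """Years until cost, falling by a factor of (1 + rate) per year, reaches `wtp`.

    Assumption: all improvement shows up as lower cost at fixed performance.
    """
    if cost <= wtp:
        return 0.0
    return math.log(cost / wtp) / math.log(1.0 + rate)


if __name__ == "__main__":
    # 0.30 and 0.40 are the rates cited in the text; 0.03 is a hypothetical slow rate.
    for rate in (0.03, 0.30, 0.40):
        print(
            f"{rate:>4.0%}/yr: x{improvement_factor(rate, 10):5.1f} in 10 years, "
            f"doubles every {doubling_time(rate):4.1f} years, "
            f"$10,000 -> $1,000 in {years_until_affordable(10_000, 1_000, rate):4.1f} years"
        )
```

Under these assumptions, a tenfold cost reduction takes under nine years at 30% to 40% per year, but nearly 78 years at 3%, which is why the rate of improvement shapes how quickly a technology moves down the demand curve to new customers.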

The other factor missed in traditional business models is the economics of the activity or industry. Behind the success of personal computers was the economics of preparing documents. Before PCs, we wrote them out by hand, gave them to secretaries, and then penciled in revisions to the drafts made by secretaries. At first secretaries used PCs to enter our handwritten documents, but gradually, white collar workers did the first drafts and revisions, thus reducing the need for secretaries.

The economic advantage of buying books on Amazon was also immediately obvious. Unable to find many of the books we wanted in small bookstores before 1995, we resorted to calling publishers, filling out forms that had been distributed in our offices, or visiting mega-bookstores with tens if not hundreds of thousands of books (e.g., Powell’s Books in Portland). Amazon simplified this activity, making it easier to find the books we wanted. Free reports, news, and other information have also changed the economics of information, making it easier for us to obtain it, although its quality has become questionable, which is a different issue.

How can we apply these lessons to today’s technologies? Ride sharing services are barely different from the taxi services they are replacing: they use the same types of vehicles, roads, and drivers as taxis, and the main difference between the new and the old is that users can book a ride using their phones, thus eliminating the dispatcher. Therefore, it’s not surprising that these services have not led to higher productivity in the form of more people transported per unit of time[2], lower costs per trip, or even less car ownership and thus a smaller need for parking spaces. Instead, ride hailing has increased congestion just as an increase in the number of taxis or private vehicles would have done, something that could have been understood by analyzing the underlying technology and the economics of the industry. Just as important, few if any improvements are occurring. Unlike computers, phones, and the Internet, whose improvements in both cost and performance came from Moore’s Law and other underlying improvements, cars, roads, and even smart phone apps do not get appreciably better.

What should have been done? Congestion and the scarcity of road space should have been the starting point in a search for better services, not automating the dispatcher. Entrepreneurs should have been trying to increase the number of shared rides, so that roads could provide more people-miles while also reducing travel time. Even driverless vehicles should aim for the same goal through shorter spacing between vehicles, rather than trying to handle every single contingency. The latter approach also ignores the importance of killer apps and customers: which part of the driving experience can driverless vehicles improve, and for which customers? Which cities, weather types, roads, parking lots, and drivers can be addressed most economically?
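The people-miles argument can be made concrete with a standard traffic-flow identity: vehicles per lane per hour equal 3,600 divided by the average time headway between vehicles in seconds, and people moved equal vehicle flow times average occupancy. The minimal Python sketch below applies it; the headways and occupancies are hypothetical round numbers, and the model ignores intersections, merging, and other real-world limits.

```python
def vehicles_per_hour(headway_s: float) -> float:
    """Vehicles passing a point per lane per hour at a constant time headway (seconds)."""
    if headway_s <= 0:
        raise ValueError("headway must be positive")
    return 3600.0 / headway_s


def people_per_hour(headway_s: float, occupancy: float) -> float:
    """People moved per lane per hour: vehicle flow times average occupancy."""
    return vehicles_per_hour(headway_s) * occupancy


if __name__ == "__main__":
    # Hypothetical scenarios: (time headway in seconds, average people per vehicle).
    scenarios = {
        "solo rides, human drivers": (2.0, 1.0),
        "shared rides, human drivers": (2.0, 2.0),
        "solo rides, shorter automated spacing": (1.0, 1.0),
        "shared rides, shorter automated spacing": (1.0, 2.0),
    }
    for label, (headway, occupancy) in scenarios.items():
        print(f"{label:<42}{people_per_hour(headway, occupancy):>6,.0f} people/lane/hour")
```

Under these assumptions, doubling occupancy through shared rides does as much for road capacity as halving the spacing between vehicles, and the two effects multiply.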

Consider AI, a technology that some claim can do everything, when, as with other technologies, it will first conquer some applications and then others. A simple distinction can be made between the virtual and physical worlds. AI is widely used in news, advertising, finance, and other virtual applications, at least according to Google and Amazon, while driverless vehicles and health care have been big failures. Moving forward, we can expect AI products and services to succeed faster in the virtual world than in the physical one.

Another distinction can be made between applications that require high accuracy and those that do not. A recent report on the state of AI[3] found that reducing the ImageNet error rate from 11.5% to 1% requires exponential increases in computing power, and thus exponential increases in costs. On the other hand, the training time needed for the same ImageNet classification has been falling rapidly because the computing resources needed to achieve the same accuracy have been decreasing by a factor of 2 every 16 months. Thus, while “exponential increases in computing power are needed to sustain improvements in image classification accuracy using standard data sets such as ImageNet,” achieving the same accuracy is becoming easier. This suggests that companies should focus more on applications that do not require high accuracy, since these can be achieved more economically than those that do.
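A halving every 16 months compounds quickly. Assuming the rate cited above holds constant and that cost is proportional to the compute used, the minimal Python sketch below shows the compute (and thus, roughly, the cost) needed to reach a fixed accuracy, relative to today.

```python
def relative_compute(months: float, halving_months: float = 16.0) -> float:
    """Compute needed for a fixed accuracy, relative to today, if it halves every `halving_months`."""
    return 0.5 ** (months / halving_months)


if __name__ == "__main__":
    for years in (1, 2, 4, 8):
        share = relative_compute(12 * years)
        print(f"after {years} yr: {share:.3f} of today's compute ({1 / share:.1f}x cheaper)")
```

After four years (48 months, or three halvings), the same accuracy needs one eighth of the compute. The sketch says nothing about pushing accuracy higher, which the same report found requires exponentially more compute.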

The other factor relevant to the economics of AI is the economics of the industry. Too many data scientists focus on labor, and thus there are too many reports of occupations being eliminated. The problem with this argument is that labor is not the largest cost in most industries; the largest costs are capital, such as cars, land, buildings, and other machines, and sometimes energy. The modern world is not one of toiling workers; it is a world of machines (including computers), often operated by well-educated professionals. Factories, farms, mines, air travel, logistics, banks, insurance, health care, and other businesses are highly automated, with capital and often energy costs higher than labor costs. If we want lower costs, we need more productive machines and land, something that comes from targeting the right applications, the killer applications. Yet analyses of AI focus almost exclusively on its impact on jobs, when what we want to know is how AI can improve the productivity of our machines, buildings, and land, and reduce energy costs.
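Why cost structure matters can be shown with a weighted sum: the share of total cost saved equals each category's share of cost times the fractional reduction in that category. The minimal Python sketch below compares the same 10% reduction applied to labor, capital, and energy; the cost shares are hypothetical, chosen only to reflect the point that capital often outweighs labor.

```python
# Hypothetical cost structure for a capital-intensive business (shares sum to 1).
HYPOTHETICAL_COST_SHARES = {"labor": 0.25, "capital": 0.55, "energy": 0.20}


def total_cost_reduction(cost_shares: dict[str, float], reductions: dict[str, float]) -> float:
    """Fraction of total cost saved when each category's cost falls by the given fraction."""
    if abs(sum(cost_shares.values()) - 1.0) > 1e-9:
        raise ValueError("cost shares must sum to 1")
    unknown = set(reductions) - set(cost_shares)
    if unknown:
        raise ValueError(f"unknown cost categories: {sorted(unknown)}")
    return sum(share * reductions.get(category, 0.0) for category, share in cost_shares.items())


if __name__ == "__main__":
    for category in ("labor", "capital", "energy"):
        saved = total_cost_reduction(HYPOTHETICAL_COST_SHARES, {category: 0.10})
        print(f"10% cheaper {category:<8} -> total cost falls {saved:.1%}")
```

With these assumed shares, cutting capital cost per unit of output by 10% saves more than twice as much as cutting labor cost by 10%.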

In summary, whatever the technology, there are economics to understand, of both the technology and the industry. The economics of the technology depends on its characteristics, something I have addressed in many previous papers[4] and something indirectly related to how the technology works. The economics of the industry depends on its cost and value drivers, and on which costs determine value for customers. Understanding these economics should form the basis for choosing the value propositions, killer applications, and customers to target.

[1] https://jeffreyleefunk.medium.com/only-6-of-73-unicorn-startups-are-profitable-and-none-did-recent-ipos-287d5c7ac8d0

[2] https://promarket.org/the-uber-bubble-why-is-a-company-that-lost-20-billion-claimed-to-be-successful/